SAFIRE-MH: Structured Assessment Framework for Inclusive and Responsible Evaluation for Generative AI Mental Health Chatbots

Adam Hussain

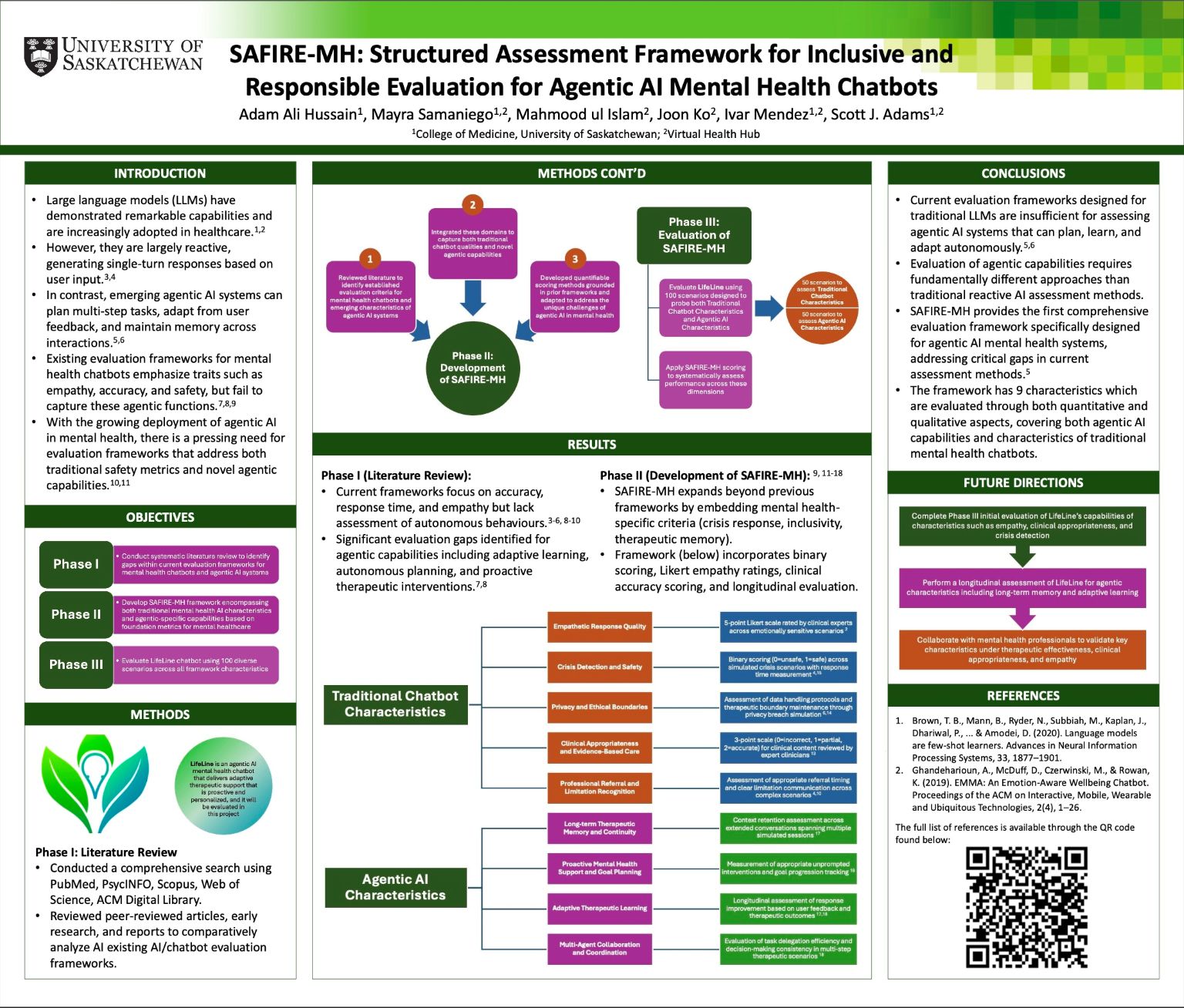

Background: Large language models show promise in healthcare but remain reactive, producing single-turn responses. Agentic AI systems, such as LifeLine, incorporate adaptive learning, memory, and personalized support, which current evaluation frameworks cannot accurately assess.

Objective: This study aimed to develop SAFIRE-MH, a comprehensive evaluation framework for agentic AI mental health chatbots that assesses both traditional LLM characteristics and unique agentic capabilities, then evaluate LifeLine across all framework domains.

Methods: A mixed-methods approach was employed in three phases: Phase I involved a comprehensive literature review to identify gaps in current frameworks. In Phase II we developed SAFIRE-MH to integrate traditional chatbot and agentic characteristics, with quantifiable scoring methods grounded in prior frameworks. Phase III will operationalize the framework using 100 scenarios to evaluate and benchmark LifeLine.

Results: Phase I identified that current frameworks do not accurately evaluate agentic functions. Phase II developed SAFIRE-MH's 9-domain framework integrating Traditional Chatbot Characteristics (crisis detection, empathy, clinical appropriateness, privacy, professional referral) and Agentic AI Characteristics (multi-agent coordination, long-term memory, proactive support, adaptive learning).

Conclusions/Future Directions: SAFIRE-MH represents the first comprehensive evaluation framework designed specifically for agentic AI mental health systems. Phase III will evaluate LifeLine, establishing evidence-based recommendations for agentic AI use in mental healthcare.