Program Evaluation Framework and Planning Guidelines

Categories: evaluation framework planning guidelines Physical Therapy

Reviewed by: Program Planning and Resource Advisory Committee

Approval Date: September 2008

Revision Dates: October 2013, June 2014, September 2014

This document describes the Program Evaluation Framework that guides the collection and analysis of data to support high quality decision making relative to meeting the Mission of the School of Physical Therapy. Although the primary focus of the framework and guidelines for evaluation is currently related to the Master of Physical Therapy (MPT) program, the intent is that the evaluation program can be adapted to accommodate changing and expanding mandates of the School and information requirements of faculty members, staff, students and other internal and external stakeholders.

The Program Planning and Resource Advisory Committee (PPRAC) of the School is mandated to direct activities related to accreditation and program review and coordinate various elements of program planning. The School’s management and administrative team ensures the components of the evaluation framework are carried out in a timely manner. This framework and guidelines document is reviewed regularly, and provides ongoing direction to evaluation activities while ensuring appropriate attention and resources are in place to address program evaluation needs in the School.

1. Guiding Principles for Directing Evaluation

Program evaluation at the School of Physical Therapy will be:

- Aligned with requirements as defined by the University, College of Medicine, College of Graduate Studies and Research, and the School Mission statement (see footnote a) and organizational values

- Based on collaboration with the School of Physical Therapy Faculty Council and Program faculty, staff and students

- Aligned with accepted external standards (e.g., accreditation)

- Directed at ensuring that the programs of the School evolve to meet the ongoing needs of the environment

- Based on appropriate collection and analysis of information for monitoring outcomes, considering change, progressing and evolving programming

- Reviewed regularly (every three to four years) by the PPRAC

In some cases, specific requests are made that influence evaluation methods and processes. The following organizations are considered important partners. The School of Physical Therapy works with them to review evaluation methods consistently, so that data collection is efficient and the data are appropriate for various purposes.

- Professional Accreditation (PEAC) – mandatory external review

- Canadian Council of Physiotherapy University Programs (CCPUP)

- Canadian Physiotherapy Association

- Alliance of Physiotherapy Regulators

- Saskatchewan College of Physical Therapists

- National Physiotherapy Advisory Group

- Saskatchewan Academic Health Science Network

- Government (e.g., Department of Advanced Education, Employment and Immigration, Department of Health)

- Regional Health Authorities and Clinical Education Facilities

Committees and faculty members, staff and students are provided with information related to performance indicators that align with requirements of program/organization on an ongoing basis. The information is provided regularly through committee reports. In addition, both the Director and the Associate Dean bi-annually report on overall program priorities and outcomes. External sources of information such as graduate outcomes in national exams are provided to committees and Faculty Council to include in planning and decision-making activities.

The School has in-house expertise (faculty, staff) related to measurement methods and database management (data collection, analysis and interpretation), and has access to other sources of information and expertise in the College (e.g., financial) and University (e.g., Integrated Planning).

2. Evaluation Framework

A program evaluation model (Payne, 1994) has been used to describe steps relevant to the School of Physical Therapy MPT evaluation. Steps in the evaluation process include:

- Specify, select, refine evaluation objectives (determine indicators)

- Establish standards/criteria (performance measures) where appropriate

- Plan appropriate evaluation design

- Select and/or develop data gathering methods

- Collect relevant data

- Process, summarize, analyze relevant data

- Contrast data with evaluation standards/criteria

- Report and provide feedback on results

- Assess cost-benefit/effectiveness

- Reflect on (evaluate) the evaluation

Throughout the ten steps listed above, consideration is also given to how to manage tasks, resources, personnel and other practical matters. In addition, faculty and staff involved in evaluation reflect on questions such as: Why are we collecting data? Are the data valid? Can we triangulate the data? How can the data inform decision-making? If changes are made to the program or curriculum as a result of the analysis, what is the impact of these changes? Throughout this process, those involved need to ensure that the right people get the right information, in the right format, at the right time.

3. Components of Program Review

During the planning and implementation phases of the MPT program, considerable attention was devoted to developing structures and building capacity to support program evaluation. It is important to note, however, that program evaluation methods and tools are constantly advancing. In general, when describing program evaluation, consideration has been given to inputs, activities, and outputs/outcomes (see Glossary of Terms at end of document). In addition, the School recognizes the importance of constantly reflecting on the resources and costs related to the evaluation process. In some cases the cost-benefit of the evaluation processes themselves may be questioned. The components of Program Review at the School of Physical Therapy include:

- Performance indicators

- Group or committee-led focused evaluation (e.g., admission, administration, student performance, curriculum)

- Periodic evaluations

- Comprehensive program evaluations (accreditation, graduate program review)

3.1 Performance Indicators

In 2010, the Director led the School in the development of a concise set of performance indicators in each of the following categories: a) student body, b) student experience, c) research, scholarly and artistic work, and d) working together and engaged university (see Appendix 1 – Performance Indicators). Each of these indicators can be aligned with the School of Physical Therapy Mission. The indicators are modeled on the University’s set of performance indicators and give all stakeholders a snapshot view of the success of the School in meeting its mandate. Clear and measurable goals (see Appendix 2 – Clear and Measurable Goals) pertaining to a) student body and b) student experience in the MPT Program have been developed based on these indicators, and standards will continue to be established for performance indicators in other categories (e.g., research, scholarly and artistic work).

In developing performance indicators, the School considered the University’s five principles related to achievement records and indicators:

- The achievement record should be widely discussed and collaboratively used

- The achievement record should support multiple purposes

- To serve its varied purposes, the achievement record should be simple

- Indicators ought to measure, as much as practical, the things that are meaningful or important to the organization

- Initially, the University of Saskatchewan should focus on a practical set of indicators, leaving more elaborate development for later if needed.

3.2 Committee-Led Focused Evaluation

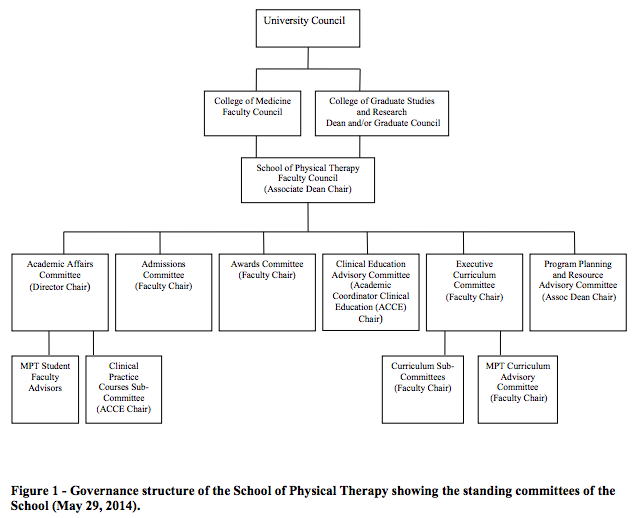

In addition to program-wide evaluation, detailed and focused group or committee-led evaluation is used to assess whether processes and outcomes are of acceptable quality. The committee structure of the School (see Figure 1) is used to full advantage in scrutinizing key areas of concern for the School: admissions, curriculum, student performance, and administrative procedures.

Examples of committee-led review are:

- Curricular Review: Ongoing development and evaluation of the curriculum requires detailed data (e.g., module, exit, graduate and employer evaluations) which contribute to and are integrated with Program Review. This component of program evaluation has been delegated to the Executive Curriculum Committee (See Appendix 3 – Curriculum Evaluation). The Executive Curriculum Committee develops innovative evaluation tools and implements strategies by which analysis of information can be shared with students, faculty and staff.

- Academic Monitoring: Academic Affairs Committee develops processes and tools to monitor student performance throughout the program with attention to early recognition of academic, clinical and/or professional issues.

- Admissions: Admissions processes (e.g., application process, Multiple Mini-Interview) are evaluated in relation to inputs and outcomes, and the results inform ongoing development.

- Administration Processes and Practices: The School participates in and responds to College and University led evaluation (e.g. financial processes audit, operational review of staff allocation, etc.).

3.3 Periodic Evaluation

The School must have established systems and capacity to respond effectively to periodic requests (e.g., graduate numbers, location of employment of graduates, graduate satisfaction, graduate outcomes, faculty research activities, program costs, applicant pool, etc.). Periodic evaluation at the School draws on external processes (e.g., Physiotherapy Competency Examination (PCE)), external requirements (e.g., PEAC), internal processes at the university level (e.g., collegial processes) and internal requirements (e.g., Graduate Program Review (GPR)). In addition, periodic evaluation uses several tools to evaluate program quality and effectiveness (see Appendix 4 – Tools).

The committees of the School participate in the design, administration, data dissemination, data analysis, action planning, and reporting (see Table 1). Fundamental to periodic program evaluation are the:

- identification of performance indicators

- development of evaluation tools (e.g., exit, graduate, and employer surveys)

- data collection, analysis and interpretation

- generation, review and dissemination of appropriate reports

- development of action plans based on relevant data

- implementation of action plans

- monitoring and evaluation of impact(s) of change(s) made

Table 1: Program Evaluation and Reporting

This table describes the responsibilities that various entities and committees within the School have with respect to each of the program evaluation tools (surveys, reports). It also describes how data arising from the evaluation tools are processed, analyzed and reported.

| Who | Task | Reporting (to whom, what) |
|---|---|---|
| Management and Administrative Staff: Associate Dean, Director and Administrative Staff (Administrative Coordinator, Executive Assistant, Academic Program Assistant, Clerical Assistant) | | PPRAC: / Faculty Council / OTHER: |
| PPRAC | | ANNUAL REPORT TO FACULTY COUNCIL / MANAGEMENT / REPORTS TO EXTERNAL REVIEW |
| Executive Curriculum Committee (ECC) | | MANAGEMENT / REPORT TO FACULTY COUNCIL / REPORTS TO EXTERNAL REVIEW |
| Other Committees (Awards, Admissions, Academic Affairs, Clinical Education Advisory Committee/Clinical Education Unit) | | MANAGEMENT / REPORT TO FACULTY COUNCIL / REPORTS TO EXTERNAL REVIEW |
| Evaluation Advisory Group | From time to time, an evaluation assessment advisory group may be struck by Administration to evaluate the full process (gaps, overlap, related to surveys, etc.). | MANAGEMENT |
| Faculty Council | | |

3.4 Comprehensive Program Evaluation and Development

In keeping with University and external requirements, the School undertakes regular comprehensive program review. A variety of information sources and evaluation tools, based on a logic model linking inputs, activities and outputs/outcomes, are used. The School of Physical Therapy uses this approach for strategic planning undertaken as part of the four-year University Integrated Planning cycle, or when major changes are planned (e.g., curriculum redesign). This process involves a review of the mission, vision, values and objectives of the School with consideration of internal inputs (Physical Therapy faculty, staff, and students), College and University alignment (e.g., University Integrated Planning) and external/environmental needs (e.g., PEAC, health care system, profession). Because of the scope and depth of these reviews, a high level of coordination is needed; thus the leads for this level of review are PPRAC, the Associate Dean and the Director. Reporting is provided to the relevant agency and internal stakeholders (SPT Faculty Council, Dean of College of Medicine, Dean of College of Graduate Studies and Research).

The major recurring comprehensive program review activities are:

- Accreditation - PEAC (5- to 7-year cycle)

- University of Saskatchewan Systematic Program Review - GPR (4- to 5-year cycle)

- Integrated Planning – School, College and University Plans (4-year cycle)

4. Summary

The School of Physical Therapy places a high value on program evaluation and sees it as a mechanism to drive program development and improvement, which advances achievement related to our mission and goals. Program evaluation is consistent with a theoretical framework (Payne, 1994). It is an integrated, planned activity with assigned duties for administration and analysis. Resources are assigned to collecting valid data. The program is constantly evolving, and therefore information needs and frequency of data collection are reviewed at regular intervals. Performance indicators have been developed to measure our progress, with clear and measurable goals relating to key performance indicators, which enables consistent analysis of Program outcomes over time.

Glossary of Terms

- Activities

- Performance of tasks resulting in outputs; also referred to as processes.

- Performance Indicators

- Standardized, quantitative indicators of activity. Performance indicators should be acceptable, understood, meaningful and measurable.

- Inputs

- Resources ranging from personnel, financial, staffing, materials.

Note. Inputs required for program evaluation include: School budget, personnel, space, equipment and educational resources (IT, library). Information comes from a variety of sources including equipment inventory, University operating budget, etc. Examples of resources/costs of evaluation include: salaries/wages; training/development (meetings, materials); data collection (evaluation tool development/purchases; IT services/software and equipment/consultants; printing); data processing and analysis (equipment/facilities software); reporting/communication (printing, web-based costs, meetings, communication (mail, phone, fax)).

- Outcomes/Outputs

- Product or endeavours including student learning and competency development, research programs/publications, graduate employment, etc. (note: outputs are sometimes referred to as immediate deliverables; impact may also be used in place of outcomes and usually considers a lasting change often occurring over longer period of time).

References

Harvard Family Research Project, Indicators: Definition and Use in a Results-Based Accountability System, Karen Horsch (1997) http://www.gse.harvard.edu/hfrp/pubs/onlinepubs/rrb/indicators.html

Payne, D. A. (1994). Designing Educational Project and Program Evaluations: A Practical Overview Based on Research and Experience. Kluwer Academic Publishers. Boston.

Project Evaluation Toolkit, Centre for the Advancement of Learning and Teaching (CALT), University of Tasmania http://www.utas.edu.au/pet/sections/frameworks.html

University of Saskatchewan Achievement Record 2010: http://www.usask.ca/achievementrecord/

ProVention Consortium, Monitoring and Evaluation Sourcebook

Appendix 1: Performance Indicators

Performance indicators are defined as standardized, quantitative indicators of activity. They should be acceptable, understood, meaningful and measurable. The following performance indicators have been developed by the School of Physical Therapy with input from all standing committees and are framed using the structure used by University of Saskatchewan.

1. Student Body

- Description:

- Student profile of MPT students

- MPT Applicants

- Number of applicants

- Aboriginal ancestry

- MPT Students admitted (n = 40)

- Grade point average (GPA) mean

- GPA minimum

- Aboriginal ancestry

- Student profile of other graduate students

- MSc in program

- PhD in program

- Post-Doctoral Fellows

- Relevance:

- Admission due diligence: selection of best candidates

- Caliber

- Admission equity

- Access

- Inclusion in aggregate student body data (U of S, CGSR)

- Trends over time

2. Student Experience

MPT Students

- Description:

- Learning and learning outcomes

- Graduates who responded favorably to preparedness for entry-to-practice in PT

- Employers who responded favorably to graduates’ preparedness for entry-to-practice in PT

- Percentage of graduates who pass written PCE

- Percentage of graduates who pass practical PCE

- Student outcomes

- Number of graduates per academic year

- Percentage of graduates employed in PT (who wish to be employed in PT)

- Overall satisfaction

- Percentage of students satisfied with entire PT educational experience

- Percentage of graduates satisfied with the entire PT educational experience

- Percentage of graduates who would recommend the U of S MPT program to others

- Relevance:

- Student satisfaction with program content/delivery/resources

- Employability of graduates

Other Graduate Students

- Description:

- Number of MSc graduates

- Number of PhD graduates

3. Research, Scholarly and Artistic Work

- Description:

- Research funding

- Total External funding secured as PI or Co-Investigator (total $)

- Total funding as PI ($)

- Collaborative research (TBD)

- Research activity

- Number of funded projects in which PT faculty/staff participated

- Number of applications for funded projects in which PT faculty/staff participated

- Knowledge translation

- Number of academic publications in which faculty/staff are primary authors or co-authors

- Number of papers by faculty/staff presented at conferences

- Faculty awards and honours

- Number of prestigious regional, national and international awards received by faculty/staff

- Relevance:

- Collegial process

- Quality of teaching and research

- Recognition of faculty at University and national level

- Viability of department research

4. Working Together and Engaged University

- Description:

- Non research revenue

- Operating budget ($)

- Total value of donations

- Engaged partnerships

- Number of clinical sites which have taken MPT students

- Number of geographical locations where clinical sites are situated

- Number of executive positions/leadership roles (on regional/national/international committees) held by faculty/staff

- Number of exchange students

- Number of visiting lecturers

- Number of other academic visitors (faculty, students)

- Relevance:

- Operational efficiency

- Endorsement of program by external bodies

- Partner participation in teaching mission

Appendix 2: Clear and Measurable Goals

The following MPT Program Goals have been developed through consensus and approved by Faculty Council:

Intake

- Each year, we expect to recruit a robust pool of qualified applicants from which to select a strong cohort of new MPT students:

- A minimum of 100 qualified applicants for 40 seats

- Of 40 seats, 5 designated seats (or more) will be filled by qualified applicants through the Education Equity Program for Aboriginal students

The Program

- A minimum of 90% of entering MPT students successfully complete the MPT Program.

- A minimum of 90% of graduates report being satisfied with their entire MPT educational experience.

Upon Graduation

- A minimum of 90% of responding surveyed graduates recommend the U of S MPT Program to others.

- A minimum of 90% of graduates pass both the written and clinical components of the national Physiotherapy Competency Exam on first attempt.

In The Workforce

- A minimum of 90% of responding surveyed graduates successfully obtain employment in physical therapy.

- One year following graduation, a minimum of 90% of responding surveyed graduates reflect that they were prepared for entry-to-practice in physical therapy on graduation.

- A minimum of 90% of responding surveyed employers report U of S MPT graduates were prepared for entry-to-practice in physical therapy.
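Because each goal above is a minimum threshold, contrasting a year's data with the goals can be expressed as a simple comparison. The following is a minimal sketch only, not a tool used by the School: the goal keys (e.g., `completion_pct`), the function names and the example figures are hypothetical, and only the thresholds (100 applicants, 5 designated seats, 90%) come from the goals listed above.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Goal:
    key: str        # hypothetical identifier, not taken from the source document
    minimum: float  # the observed value must be greater than or equal to this


# Thresholds mirror the goals listed above; the keys are illustrative names.
GOALS: List[Goal] = [
    Goal("qualified_applicants", 100),
    Goal("equity_seats_filled", 5),
    Goal("completion_pct", 90.0),
    Goal("graduate_satisfaction_pct", 90.0),
    Goal("would_recommend_pct", 90.0),
    Goal("pce_first_attempt_pass_pct", 90.0),
    Goal("employed_in_pt_pct", 90.0),
    Goal("graduate_reported_prepared_pct", 90.0),
    Goal("employer_reported_prepared_pct", 90.0),
]


def percentage(count: int, total: int) -> float:
    """Convert a count out of a total (e.g., survey respondents) to a percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * count / total


def check_goals(observed: Dict[str, float]) -> Dict[str, Optional[bool]]:
    """Return True/False for each goal, or None when no data were supplied."""
    results: Dict[str, Optional[bool]] = {}
    for goal in GOALS:
        if goal.key in observed:
            results[goal.key] = observed[goal.key] >= goal.minimum
        else:
            results[goal.key] = None
    return results


# Invented example figures, for illustration only.
example = {
    "qualified_applicants": 120,
    "completion_pct": percentage(35, 40),
}
example_results = check_goals(example)
```

Goals with no data are reported as `None` rather than as unmet, so that a missing survey response is not mistaken for a failed target.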

Appendix 3: MPT Curriculum Evaluation

The Executive Curriculum Committee (ECC) in the School of Physical Therapy is composed of two full-time faculty members, the Academic Coordinator Clinical Education or Assistant Academic Coordinator Clinical Education, a Clinical Specialist - Administrative Coordinator (ex officio), a Curriculum Consultant (ex officio), and one student member from each year of the M.P.T. program (to be appointed by PTSS).

The ECC is one of several committees that report directly to the School of Physical Therapy’s Faculty Council. Ad hoc direct and indirect lines of communication also exist between the ECC and the Academic Affairs Committee, Admissions Committee, Clinical Education Committee and Program Planning and Resource Advisory Committee (PPRAC). These lines of communication provide the ECC and School of Physical Therapy faculty with many informal mechanisms for sharing information regarding curriculum and curricular issues.

The objectives as documented in ECC Terms of Reference include:

- To monitor curriculum activities.

- To approve minor curricula changes.

- To provide ongoing direction and support to curriculum development and implementation.

In alignment with stated ECC objectives, the ECC is accountable for the ongoing process of monitoring, evaluating and continuous quality improvement for all aspects of the Master of Physical Therapy (M.P.T.) curriculum. The MPT Curriculum Advisory Committee is periodically consulted to assist in effectively meeting this mandate. The ECC reports on this process as part of the overall MPT Program Evaluation Plan.

Curriculum includes all of the experiences that individual learners have in the Master of Physical Therapy program. Curricular evaluation will include an analysis of the curriculum overall and each component individually. The purposes of M.P.T. curriculum evaluation include:

- To construct and interpret a clear overall view of what is happening in the M.P.T. program and compare this with curriculum intentions;

- To identify relative strengths and weaknesses of the M.P.T., as a basis for ongoing curriculum refinement and development;

- To determine the effectiveness of the curriculum in preparing learners to become:

- Competent specialists in physical therapy practice

- Ethical, compassionate, accountable health professionals

- Life-long learners

- Evidence-based health professionals

- Educators

- Primary health care practitioners

- Intersectoral, collaborative health care practitioners

- Professional leaders

- To assist PPRAC regarding resource expenditures related to curriculum development and maintenance. For example, if several instructors identify that additional resources are needed for course implementation, this information will be submitted to PPRAC.

The curricular evaluation plan takes into account: vertical organization (sequencing of curricular content elements, e.g., depth and complexity as sequencing guides); linear congruence/horizontal organization (what courses should precede and follow others and which should be concurrent, e.g., novelty of information), and internal consistency (objectives, subject matter taught, learning activities used et cetera, and outcomes should be congruent with mission statement, philosophy, vision, and values) of the M.P.T. curriculum.

The dynamic nature of the physical therapy profession, internal and external factors, published documents (such as the Accreditation Standards for Physiotherapy Education Programs in Canada, the Essential Competency Profile for Physiotherapists in Canada, and the Canadian Alliance of Physiotherapy Regulators Examination Blueprint), and research evidence (e.g., Physiotherapy Canada, Journal of Physical Therapy Education) assist the Executive Curriculum Committee faculty in analyzing and evaluating the M.P.T. curriculum.

The curriculum is evaluated using a variety of methods:

- Review of M.P.T. student module evaluations

The courses, the overall flow, relevance and expectations of a module are evaluated by students using a standard M.P.T. module evaluation form. Circulation of feedback from module evaluations is determined by the ECC, as appropriate. Student feedback from each module is carefully reviewed by ECC, and the committee seeks to identify trends consistent with past student feedback on the same module from previous years.

- Review of course evaluations conducted by instructors

Individual courses are periodically evaluated by faculty members and/or instructors using a standard course evaluation format. This course evaluation includes elements such as:

- Curricular Outcomes addressed (cognitive level)

- Curricular Themes/Objectives addressed (cognitive level)

- Teaching methods and learning strategies

- Course evaluation procedures

- Use of pre-requisite courses

- Use of previous M.P.T. courses

- Instructor's perception of the course

- Indication of which course objectives were met; if unmet, why

- Changes to stated course objectives

- Alignment with PEAC criteria

- Recommended changes to the course

- Review of vertical organization, linear congruence and internal consistency

- Regular review of items related to curriculum from graduate and employer survey results.

- Annual review of PCE exam results

- Ongoing review of Clinical Education Program

Appendix 4: Tools Used for Periodic Review (Program and Curriculum)

| Evaluation | Purpose | Frequency | Administered by |
|---|---|---|---|
| Exit survey | To evaluate the MPT program, student experience, and employment prospects | Annually (Module 10) | PPRAC/Administration |
| Graduate survey | To evaluate the MPT program outcomes and to gather employment data. To fulfill accreditation requirements | Annually | PPRAC/Administration |
| Employer survey | To evaluate MPT program outcomes. To fulfill accreditation requirements | Every three years | PPRAC/Administration |
| Student evaluation of teaching effectiveness | To evaluate course instructors | After every academic course | Administration |
| MPT module evaluation by students | To evaluate MPT modules | After every academic module | ECC/Administration |
| Student evaluation of clinical placements | To evaluate placements and clinical instructors during clinical courses | After every placement | Clinical Education/Administration |

a. School of PT Mission: “to achieve excellence in scholarly activities through teaching, research and clinical practice, and to maintain high quality academic physical therapy programs.”